This is the last TF post for now. I've finally made it to this one…

0x00. Preface

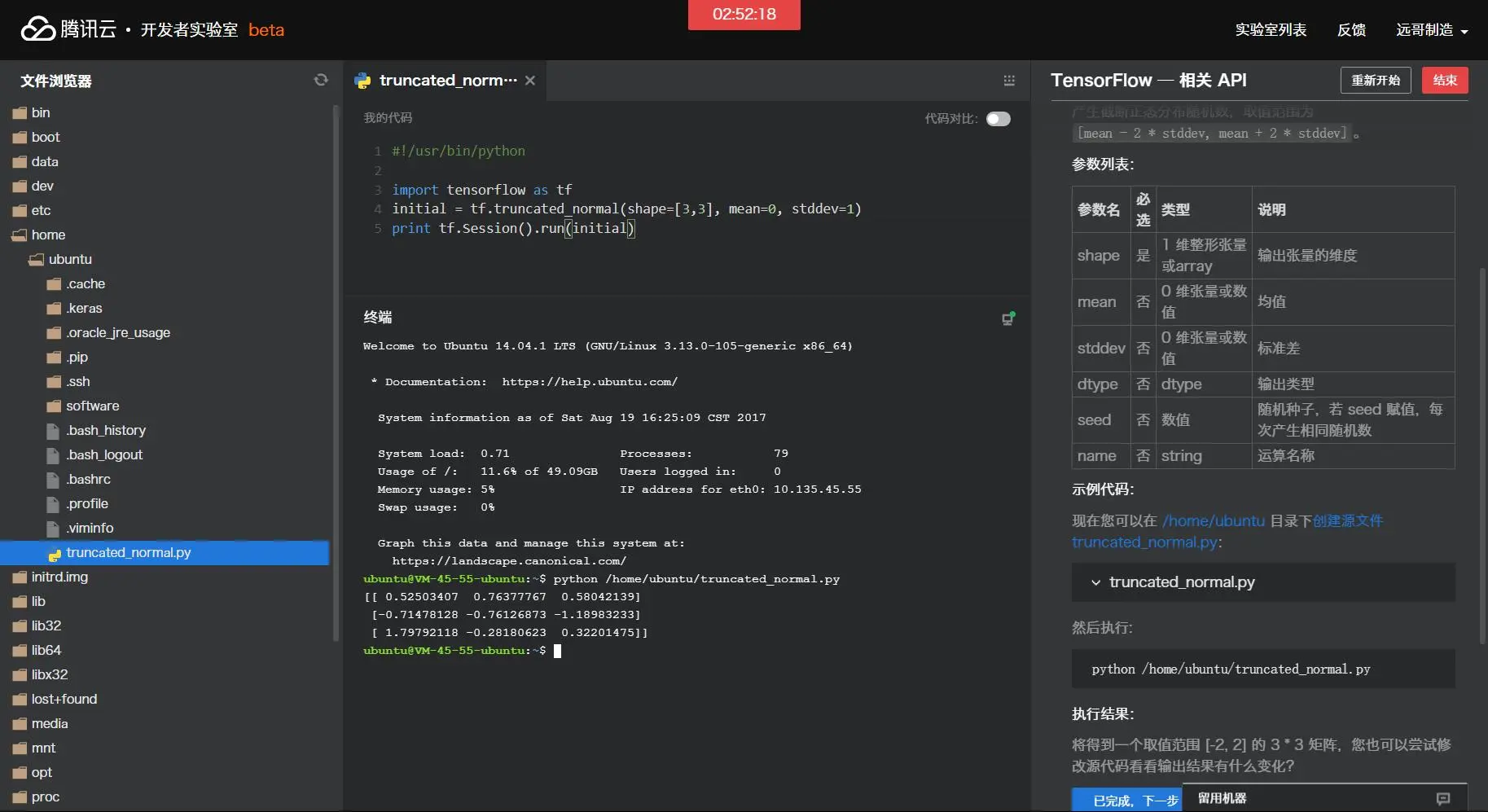

0x01. References

1.0 Understanding TensorFlow functions

1.1 tf.truncated_normal

```python
truncated_normal(
    shape,
    mean=0.0,
    stddev=1.0,
    dtype=tf.float32,
    seed=None,
    name=None
)
```

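Description: generates random numbers from a truncated normal distribution, with values in the range [mean - 2 * stddev, mean + 2 * stddev]. Samples that fall outside this range are discarded and redrawn.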

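| Parameter | Required | Type | Description |
| --- | --- | --- | --- |
| shape | Yes | 1-D integer tensor or array | Shape of the output tensor |
| mean | No | 0-D tensor or number | Mean |
| stddev | No | 0-D tensor or number | Standard deviation |
| dtype | No | dtype | Output type |
| seed | No | number | Random seed; if set, the same random numbers are produced on every run |
| name | No | string | Name of the operation |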
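To make the truncation rule concrete, here is a minimal pure-Python sketch of the rejection sampling the description implies: draw from a normal distribution and redraw any sample more than two standard deviations from the mean. It only illustrates the semantics and is not TensorFlow's implementation; the helper name `truncated_normal_sample` is hypothetical.

```python
import random


def truncated_normal_sample(shape, mean=0.0, stddev=1.0, seed=None):
    """Hypothetical helper: nested lists of truncated-normal samples.

    Any draw outside [mean - 2*stddev, mean + 2*stddev] is discarded and
    redrawn, mirroring the rule described for tf.truncated_normal.
    """
    rng = random.Random(seed)  # a fixed seed makes the output repeatable

    def draw():
        while True:
            v = rng.gauss(mean, stddev)
            if abs(v - mean) <= 2 * stddev:
                return v

    def build(dims):
        if not dims:
            return draw()
        return [build(dims[1:]) for _ in range(dims[0])]

    return build(list(shape))


m = truncated_normal_sample([3, 3], mean=0, stddev=1, seed=42)
print(m)  # a 3x3 nested list, every entry within [-2, 2]
```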

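Now create the source file truncated_normal.py in the /home/ubuntu directory (this and the following scripts use the TensorFlow 1.x API, e.g. tf.Session):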

```python
import tensorflow as tf

# 3x3 matrix drawn from a normal distribution truncated at +/- 2 stddev
initial = tf.truncated_normal(shape=[3, 3], mean=0, stddev=1)
print(tf.Session().run(initial))
```

Then run: python /home/ubuntu/truncated_normal.py. The output is a 3 x 3 matrix whose values lie in [-2, 2].

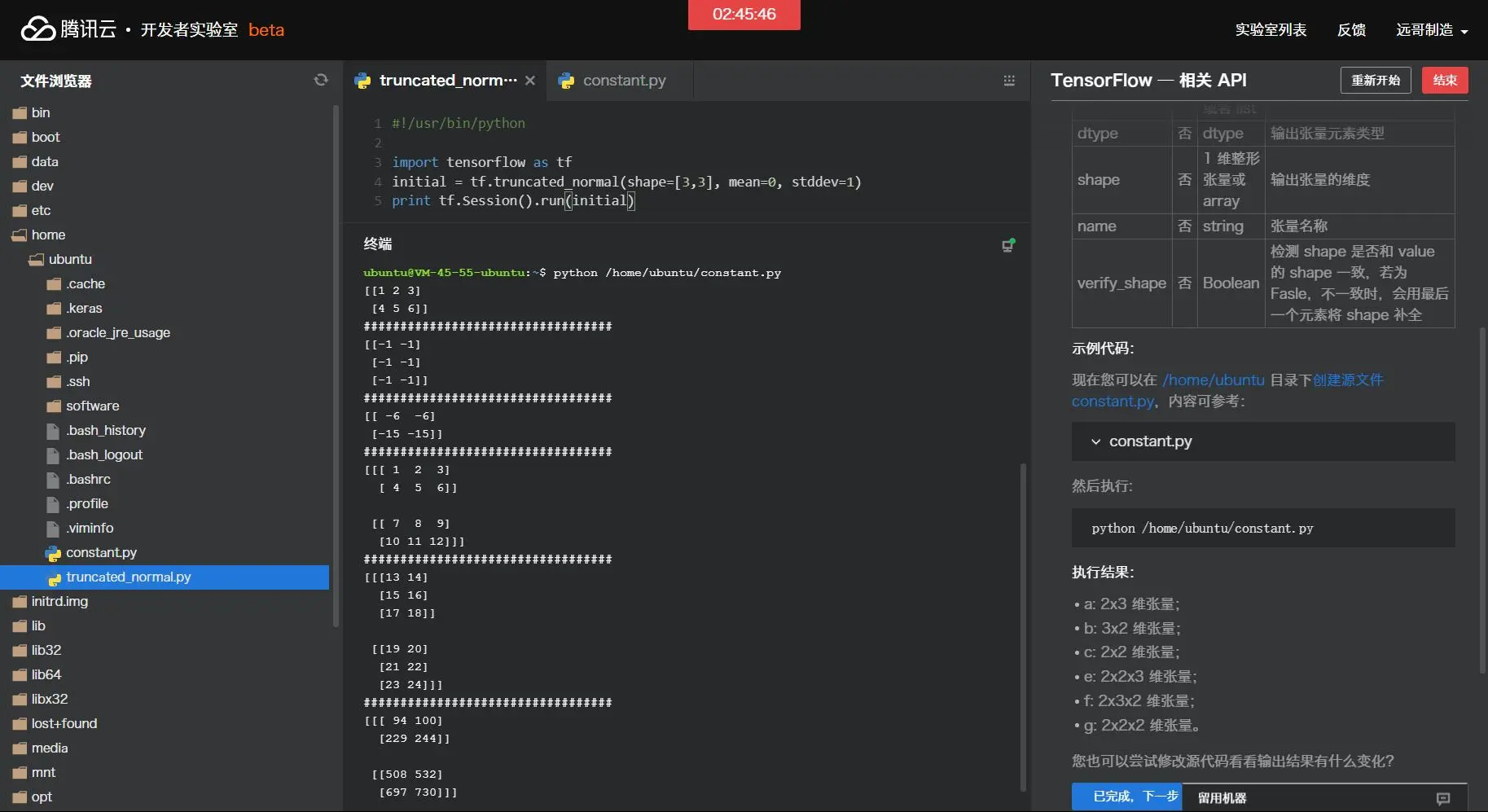

1.2 tf.constant

```python
constant(
    value,
    dtype=None,
    shape=None,
    name='Const',
    verify_shape=False
)
```

Description: creates a constant tensor with dimensions shape from value.

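| Parameter | Required | Type | Description |
| --- | --- | --- | --- |
| value | Yes | constant value or list | Value(s) of the output tensor |
| dtype | No | dtype | Element type of the output tensor |
| shape | No | 1-D integer tensor or array | Shape of the output tensor |
| name | No | string | Name of the tensor |
| verify_shape | No | Boolean | Whether to check that shape matches the shape of value; if False and they differ, shape is filled by repeating the last element of value |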
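The filling behaviour described for verify_shape=False can be sketched in pure Python: pad the flat value with its last element up to the number of elements shape requires, then reshape row-major. `fill_constant` is a hypothetical helper that illustrates the TF 1.x behaviour described above; it assumes value is a scalar or a flat list, and treating too many elements as an error is an assumption of this sketch.

```python
from functools import reduce
import operator


def fill_constant(value, shape):
    """Hypothetical helper mimicking tf.constant(value, shape=shape) in TF 1.x.

    Assumes `value` is a scalar or a flat list. A value shorter than the
    shape is padded with its last element, then reshaped row-major.
    """
    flat = value if isinstance(value, list) else [value]
    size = reduce(operator.mul, shape, 1)
    if len(flat) > size:
        raise ValueError("too many elements for shape %s" % (shape,))
    flat = flat + [flat[-1]] * (size - len(flat))  # pad with the last element

    def reshape(items, dims):
        if not dims:  # scalar shape []
            return items[0]
        if len(dims) == 1:
            return items
        step = len(items) // dims[0]
        return [reshape(items[i * step:(i + 1) * step], dims[1:])
                for i in range(dims[0])]

    return reshape(flat, list(shape))


print(fill_constant(-1, [3, 2]))                  # [[-1, -1], [-1, -1], [-1, -1]]
print(fill_constant([1, 2, 3, 4, 5, 6], [2, 3]))  # [[1, 2, 3], [4, 5, 6]]
print(fill_constant([1, 2], [2, 2]))              # [[1, 2], [2, 2]]
```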

Now create the source file constant.py in /home/ubuntu, for example:

```python
import tensorflow as tf
import numpy as np

a = tf.constant([1, 2, 3, 4, 5, 6], shape=[2, 3])  # 2x3
b = tf.constant(-1, shape=[3, 2])                  # 3x2, filled with -1
c = tf.matmul(a, b)                                # 2x2

e = tf.constant(np.arange(1, 13, dtype=np.int32), shape=[2, 2, 3])
f = tf.constant(np.arange(13, 25, dtype=np.int32), shape=[2, 3, 2])
g = tf.matmul(e, f)                                # batched product: 2x2x2

with tf.Session() as sess:
    print(sess.run(a))
    print("##################################")
    print(sess.run(b))
    print("##################################")
    print(sess.run(c))
    print("##################################")
    print(sess.run(e))
    print("##################################")
    print(sess.run(f))
    print("##################################")
    print(sess.run(g))
```

Then run: python /home/ubuntu/constant.py

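Result: a is a 2x3 tensor, b is 3x2, c is 2x2, e is 2x2x3, f is 2x3x2 and g is 2x2x2. For the rank-3 tensors e and f, tf.matmul treats the first dimension as a batch and multiplies the 2x3 and 3x2 matrices pair by pair.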
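To see where these shapes and values come from, here is a pure-Python worked example of what tf.matmul computes for the two cases in constant.py: an ordinary 2x3 by 3x2 product, and the rank-3 case where the leading dimension is a batch. The helpers `matmul` and `batch_matmul` are hypothetical; the data reproduces a, b, e and f from the script.

```python
def matmul(a, b):
    """Plain 2-D matrix product of nested lists: (n x k) @ (k x m) -> (n x m)."""
    assert len(a[0]) == len(b), "inner dimensions must match"
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def batch_matmul(e, f):
    """Rank-3 case: multiply matrices pairwise along the leading batch axis."""
    assert len(e) == len(f), "batch sizes must match"
    return [matmul(x, y) for x, y in zip(e, f)]


a = [[1, 2, 3], [4, 5, 6]]
b = [[-1, -1]] * 3
c = matmul(a, b)

nums = list(range(1, 25))
e = [[nums[0:3], nums[3:6]], [nums[6:9], nums[9:12]]]  # 2x2x3, values 1..12
f = [[nums[12:14], nums[14:16], nums[16:18]],
     [nums[18:20], nums[20:22], nums[22:24]]]          # 2x3x2, values 13..24
g = batch_matmul(e, f)

print(c)  # [[-6, -6], [-15, -15]]
print(g)  # [[[94, 100], [229, 244]], [[508, 532], [697, 730]]]
```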

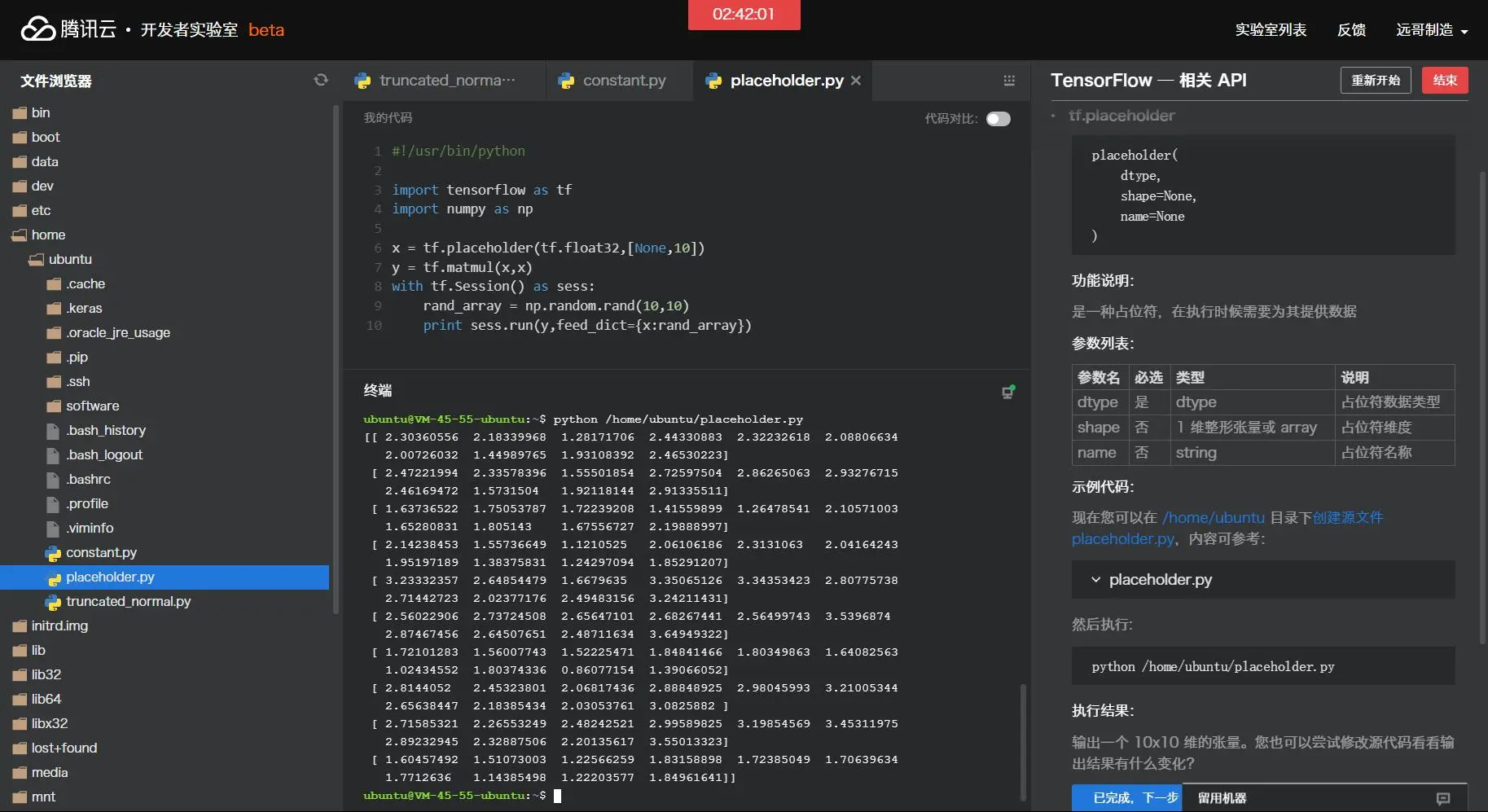

1.3 tf.placeholder

```python
placeholder(
    dtype,
    shape=None,
    name=None
)
```

Description: a placeholder; data must be supplied for it (through feed_dict) when the graph is executed.

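| Parameter | Required | Type | Description |
| --- | --- | --- | --- |
| dtype | Yes | dtype | Data type of the placeholder |
| shape | No | 1-D integer tensor or array | Shape of the placeholder; None marks a dimension of any size |
| name | No | string | Name of the placeholder |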
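The idea behind a placeholder (build the computation first, supply the data only when it runs) can be shown with a toy graph evaluator in pure Python. The classes `Placeholder` and `Op` and the function `run` are hypothetical and only mimic the shape of sess.run(..., feed_dict=...); TensorFlow's real graph machinery is far more involved.

```python
class Placeholder:
    """Hypothetical stand-in for a graph input that has no value until run time."""

    def __init__(self, name):
        self.name = name


class Op:
    """Hypothetical graph node: a function applied to the values of its inputs."""

    def __init__(self, fn, *inputs):
        self.fn = fn
        self.inputs = inputs


def run(node, feed_dict):
    """Evaluate `node`, looking up every placeholder in feed_dict."""
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise KeyError("You must feed a value for placeholder %r" % node.name)
        return feed_dict[node]
    if isinstance(node, Op):
        return node.fn(*(run(i, feed_dict) for i in node.inputs))
    return node  # plain Python constants evaluate to themselves


x = Placeholder("x")
y = Op(lambda u, v: u * v, x, x)  # the graph is built before any data exists
print(run(y, {x: 3}))  # 9
print(run(y, {x: 5}))  # 25
```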

Now create the source file placeholder.py in /home/ubuntu, for example:

```python
import tensorflow as tf
import numpy as np

x = tf.placeholder(tf.float32, [None, 10])  # any number of rows, 10 columns
y = tf.matmul(x, x)  # x @ x: only valid when the fed array has exactly 10 rows
with tf.Session() as sess:
    rand_array = np.random.rand(10, 10)
    print(sess.run(y, feed_dict={x: rand_array}))
```

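Then run: python /home/ubuntu/placeholder.py. The result is a 10x10 tensor. Because y = tf.matmul(x, x) multiplies x by itself, the fed array must have exactly 10 rows for the inner dimensions to match.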

1.4 tf.nn.bias_add

```python
bias_add(
    value,
    bias,
    data_format=None,
    name=None
)
```

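Description: adds the bias term bias to value. It can be seen as a special case of tf.add in which bias must be 1-D, its size must equal the last dimension of value, and its data type must match that of value.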

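| Parameter | Required | Type | Description |
| --- | --- | --- | --- |
| value | Yes | Tensor | Data type must be one of float, double, int64, int32, uint8, int16, int8, complex64 or complex128 |
| bias | Yes | 1-D tensor | Its size must equal the last dimension of value |
| data_format | No | string | Data format; NHWC and NCHW are supported |
| name | No | string | Name of the operation |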
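A pure-Python sketch of the constraint described above: the 1-D bias is added to every innermost row, and a bias whose length differs from the last dimension is rejected, whereas tf.add would broadcast it. `bias_add` here is a hypothetical helper on nested lists, assuming the default channels-last (NHWC) layout; it is not the TensorFlow kernel.

```python
def bias_add(value, bias):
    """Sketch of tf.nn.bias_add semantics for a channels-last nested list.

    `bias` must be 1-D and its length must equal the size of value's last
    dimension; it is added element-wise to every innermost row.
    """
    if isinstance(value[0], list):
        return [bias_add(row, bias) for row in value]
    if len(value) != len(bias):
        raise ValueError("bias length %d != last dimension %d"
                         % (len(bias), len(value)))
    return [v + b for v, b in zip(value, bias)]


a = [[1.0, 2.0], [1.0, 2.0], [1.0, 2.0]]
print(bias_add(a, [2.0, 1.0]))  # [[3.0, 3.0], [3.0, 3.0], [3.0, 3.0]]

try:
    bias_add(a, [1.0])  # tf.add would broadcast this; bias_add refuses it
except ValueError as err:
    print(err)  # bias length 1 != last dimension 2
```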

Now create the source file bias_add.py in /home/ubuntu, for example:

```python
import tensorflow as tf

a = tf.constant([[1.0, 2.0], [1.0, 2.0], [1.0, 2.0]])  # 3x2
b = tf.constant([2.0, 1.0])  # length matches the last dimension of a
c = tf.constant([1.0])       # length 1: fine for tf.add (broadcast), not for bias_add

with tf.Session() as sess:
    print(sess.run(tf.nn.bias_add(a, b)))
    print("##################################")
    print(sess.run(tf.add(a, b)))
    print("##################################")
    print(sess.run(tf.add(a, c)))
```

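Then run: python /home/ubuntu/bias_add.py. The output is three 3x2 tensors: tf.nn.bias_add(a, b) and tf.add(a, b) both give [[3, 3], [3, 3], [3, 3]], while tf.add(a, c) broadcasts the single element of c and gives [[2, 3], [2, 3], [2, 3]].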

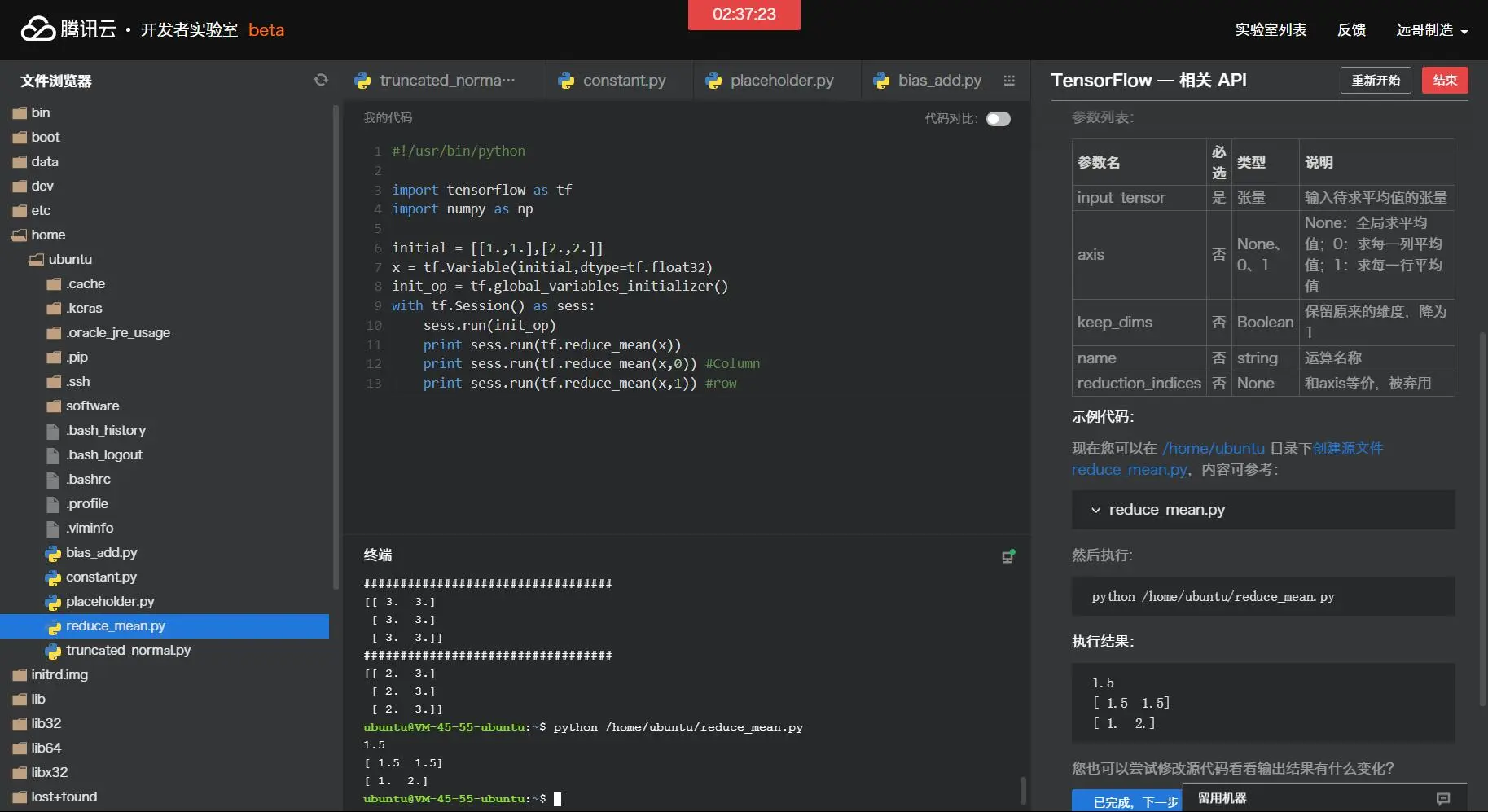

1.5 tf.reduce_mean

```python
reduce_mean(
    input_tensor,
    axis=None,
    keep_dims=False,
    name=None,
    reduction_indices=None
)
```

Description: computes the mean of the tensor input_tensor.

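| Parameter | Required | Type | Description |
| --- | --- | --- | --- |
| input_tensor | Yes | Tensor | Tensor to average |
| axis | No | None, 0, 1 | None: mean over all elements; 0: mean of each column; 1: mean of each row |
| keep_dims | No | Boolean | Keep the reduced dimensions, with length 1 |
| name | No | string | Name of the operation |
| reduction_indices | No | same as axis | Equivalent to axis; deprecated |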
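For a 2-D input, the three axis settings and keep_dims can be sketched in pure Python; the values match reduce_mean.py below. `reduce_mean` here is a hypothetical helper limited to 2-D nested lists, not TensorFlow's implementation.

```python
def reduce_mean(x, axis=None, keep_dims=False):
    """Sketch of tf.reduce_mean for a 2-D nested list.

    axis=None averages every element, axis=0 averages each column,
    axis=1 averages each row; keep_dims keeps the reduced axis with length 1.
    """
    rows, cols = len(x), len(x[0])
    if axis is None:
        m = sum(sum(r) for r in x) / (rows * cols)
        return [[m]] if keep_dims else m
    if axis == 0:
        means = [sum(x[i][j] for i in range(rows)) / rows for j in range(cols)]
        return [means] if keep_dims else means
    if axis == 1:
        means = [sum(r) / cols for r in x]
        return [[m] for m in means] if keep_dims else means
    raise ValueError("axis must be None, 0 or 1 for a 2-D input")


x = [[1., 1.], [2., 2.]]
print(reduce_mean(x))                     # 1.5
print(reduce_mean(x, 0))                  # [1.5, 1.5]
print(reduce_mean(x, 1))                  # [1.0, 2.0]
print(reduce_mean(x, 1, keep_dims=True))  # [[1.0], [2.0]]
```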

Now create the source file reduce_mean.py in /home/ubuntu, for example:

```python
import tensorflow as tf

initial = [[1., 1.], [2., 2.]]
x = tf.Variable(initial, dtype=tf.float32)
init_op = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init_op)
    print(sess.run(tf.reduce_mean(x)))     # mean of all elements
    print(sess.run(tf.reduce_mean(x, 0)))  # mean of each column
    print(sess.run(tf.reduce_mean(x, 1)))  # mean of each row
```

Then run: python /home/ubuntu/reduce_mean.py

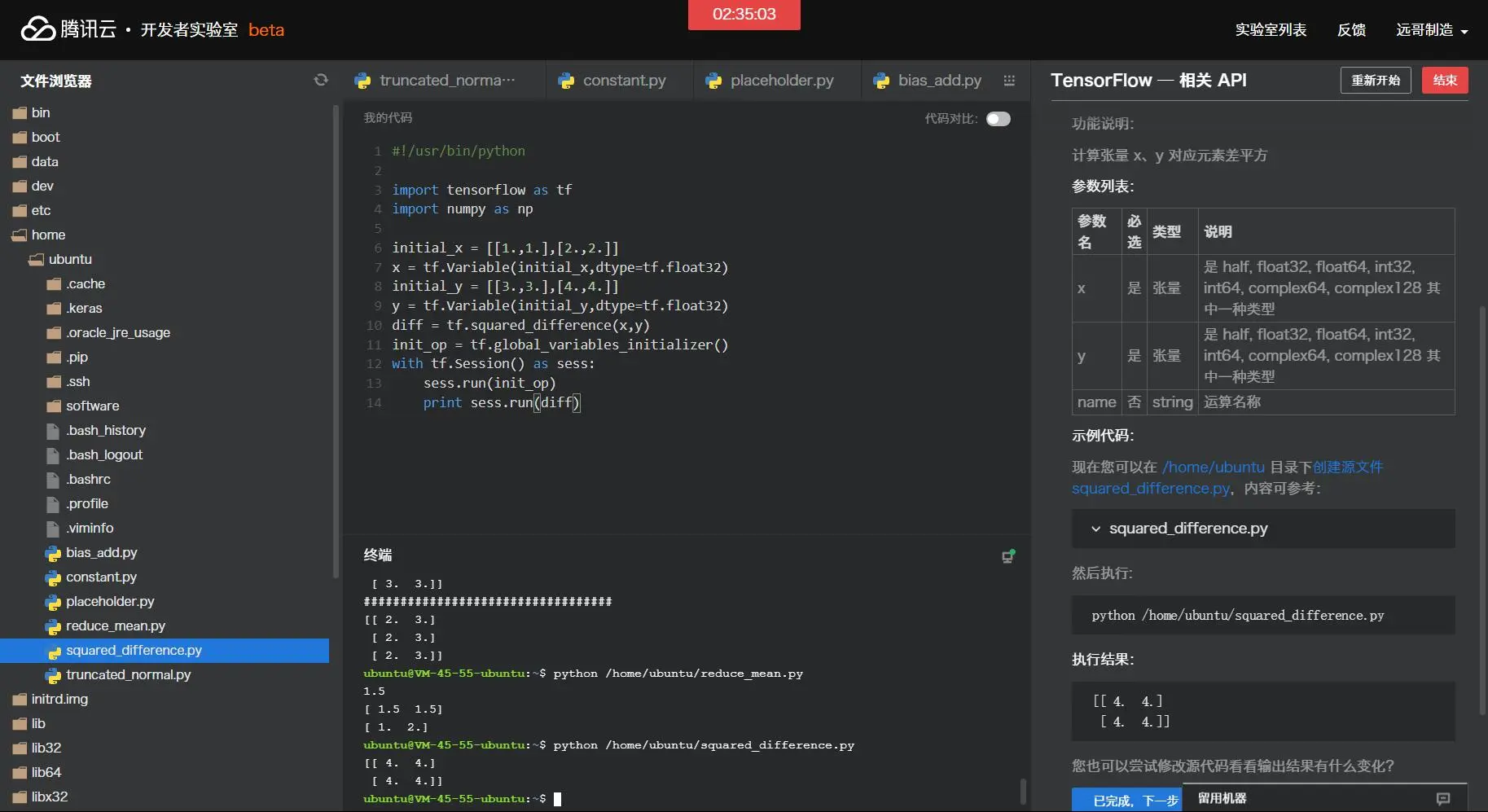

1.6 tf.squared_difference

```python
squared_difference(
    x,
    y,
    name=None
)
```

Description: computes the squared difference (x - y)^2 of corresponding elements of the tensors x and y.

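| Parameter | Required | Type | Description |
| --- | --- | --- | --- |
| x | Yes | Tensor | One of half, float32, float64, int32, int64, complex64, complex128 |
| y | Yes | Tensor | One of half, float32, float64, int32, int64, complex64, complex128 |
| name | No | string | Name of the operation |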
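Element-wise, tf.squared_difference(x, y) computes (x - y)^2. The pure-Python sketch below applies that to the same x and y as squared_difference.py, so every entry is (1 - 3)^2 = (2 - 4)^2 = 4. The helper is hypothetical and assumes equally shaped 2-D inputs; it ignores the broadcasting TensorFlow also supports.

```python
def squared_difference(x, y):
    """Element-wise (x - y) ** 2 for two equally shaped 2-D nested lists."""
    return [[(a - b) ** 2 for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]


x = [[1., 1.], [2., 2.]]
y = [[3., 3.], [4., 4.]]
print(squared_difference(x, y))  # [[4.0, 4.0], [4.0, 4.0]]
```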

Now create the source file squared_difference.py in /home/ubuntu, for example:

```python
import tensorflow as tf

initial_x = [[1., 1.], [2., 2.]]
x = tf.Variable(initial_x, dtype=tf.float32)
initial_y = [[3., 3.], [4., 4.]]
y = tf.Variable(initial_y, dtype=tf.float32)
diff = tf.squared_difference(x, y)  # element-wise (x - y)^2
init_op = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init_op)
    print(sess.run(diff))
```

Then run: python /home/ubuntu/squared_difference.py

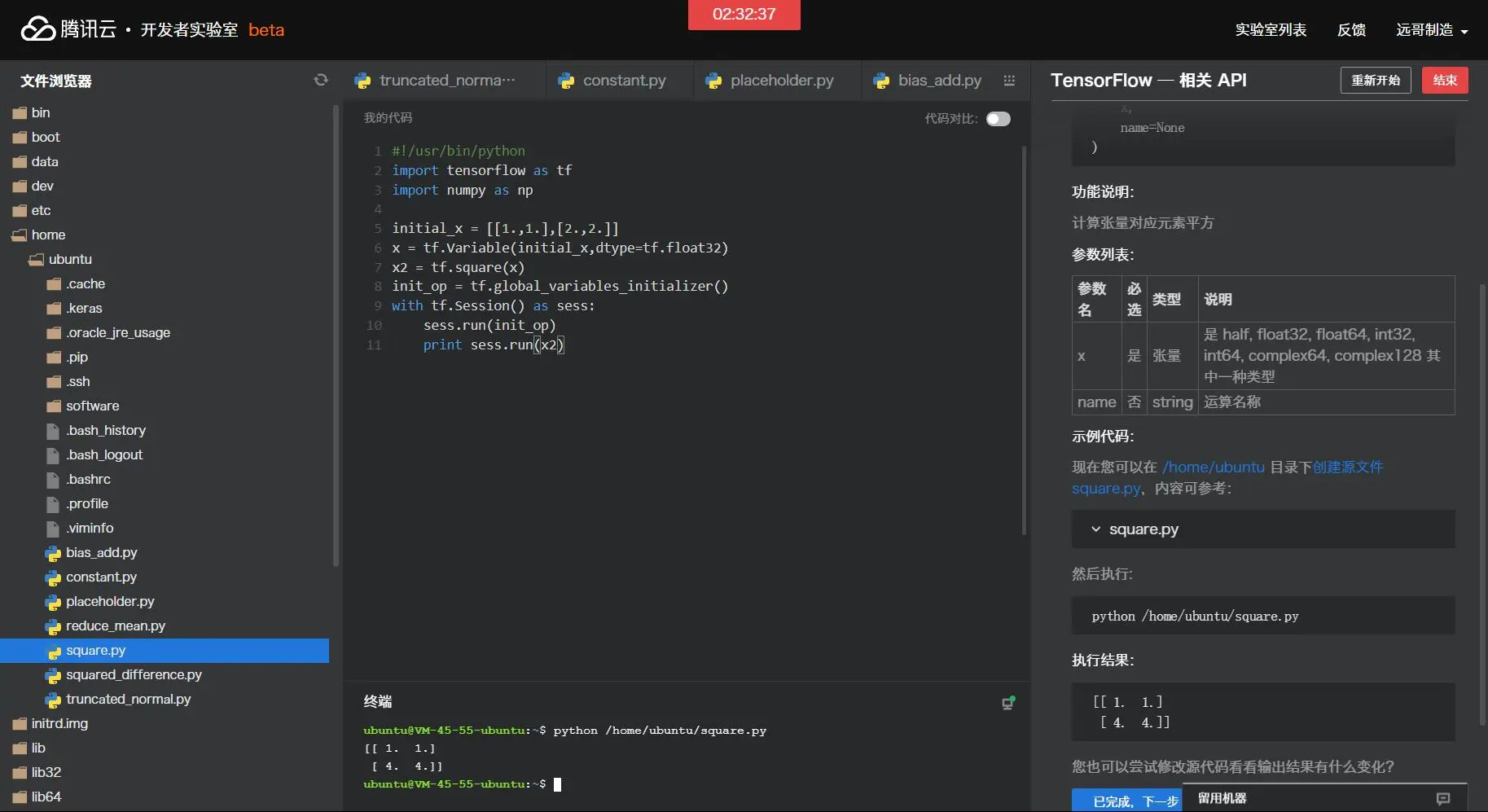

1.7 tf.square

```python
square(
    x,
    name=None
)
```

Description: computes the square of each element of a tensor.

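| Parameter | Required | Type | Description |
| --- | --- | --- | --- |
| x | Yes | Tensor | One of half, float32, float64, int32, int64, complex64, complex128 |
| name | No | string | Name of the operation |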
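For the x in square.py, each entry is simply multiplied by itself. The pure-Python sketch below also shows how this relates to tf.squared_difference: squaring the element-wise difference gives the same result. Both helpers (`square`, `subtract`) are hypothetical and assume equally shaped 2-D nested lists.

```python
def square(x):
    """Element-wise x * x for a 2-D nested list."""
    return [[v * v for v in row] for row in x]


def subtract(x, y):
    """Element-wise x - y for equally shaped 2-D nested lists."""
    return [[a - b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]


x = [[1., 1.], [2., 2.]]
y = [[3., 3.], [4., 4.]]
print(square(x))               # [[1.0, 1.0], [4.0, 4.0]]
print(square(subtract(x, y)))  # [[4.0, 4.0], [4.0, 4.0]], same as squared_difference(x, y)
```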

Now create the source file square.py in /home/ubuntu, for example:

```python
import tensorflow as tf

initial_x = [[1., 1.], [2., 2.]]
x = tf.Variable(initial_x, dtype=tf.float32)
x2 = tf.square(x)  # element-wise x * x
init_op = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init_op)
    print(sess.run(x2))
```

Then run: python /home/ubuntu/square.py

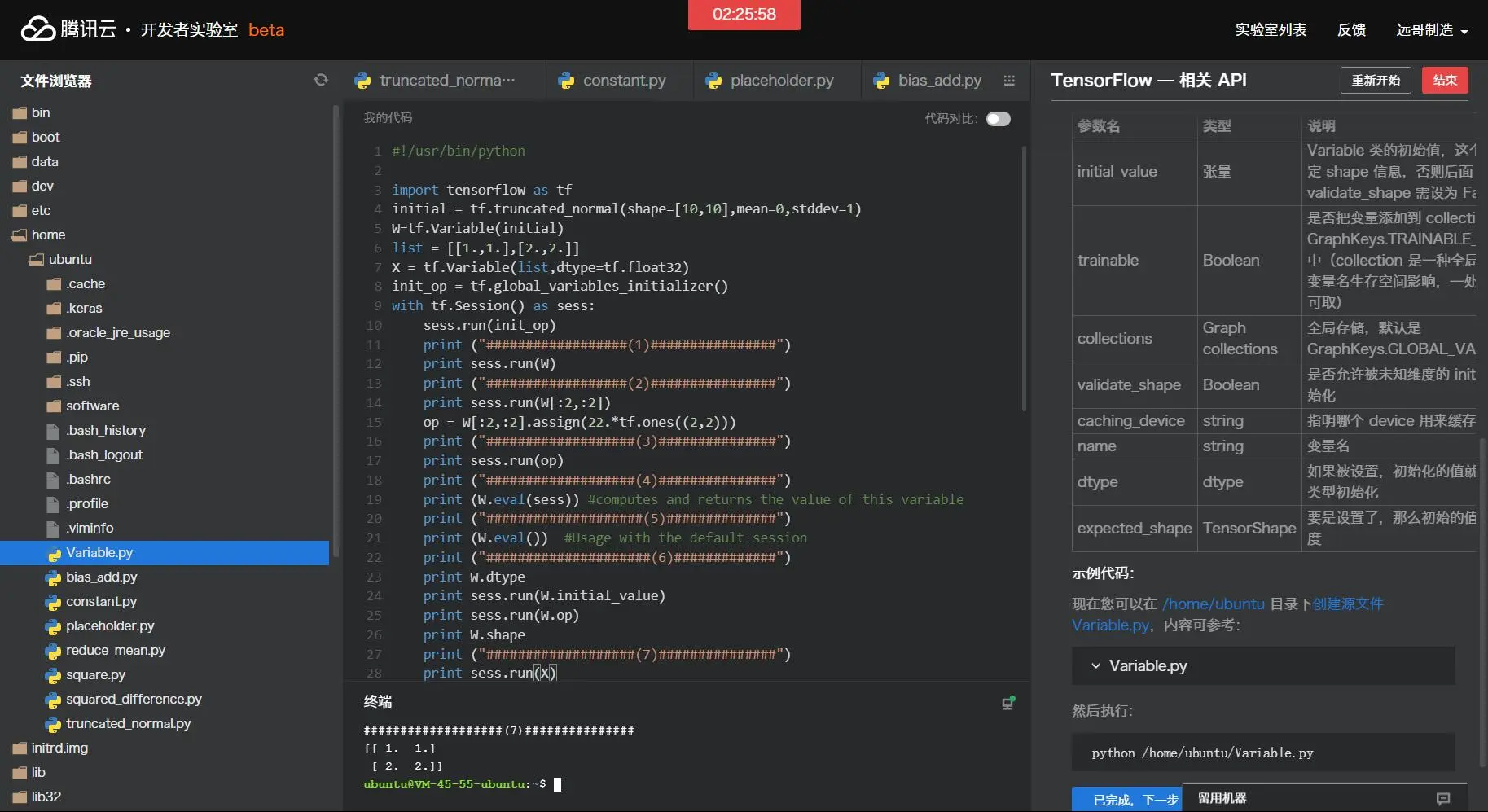

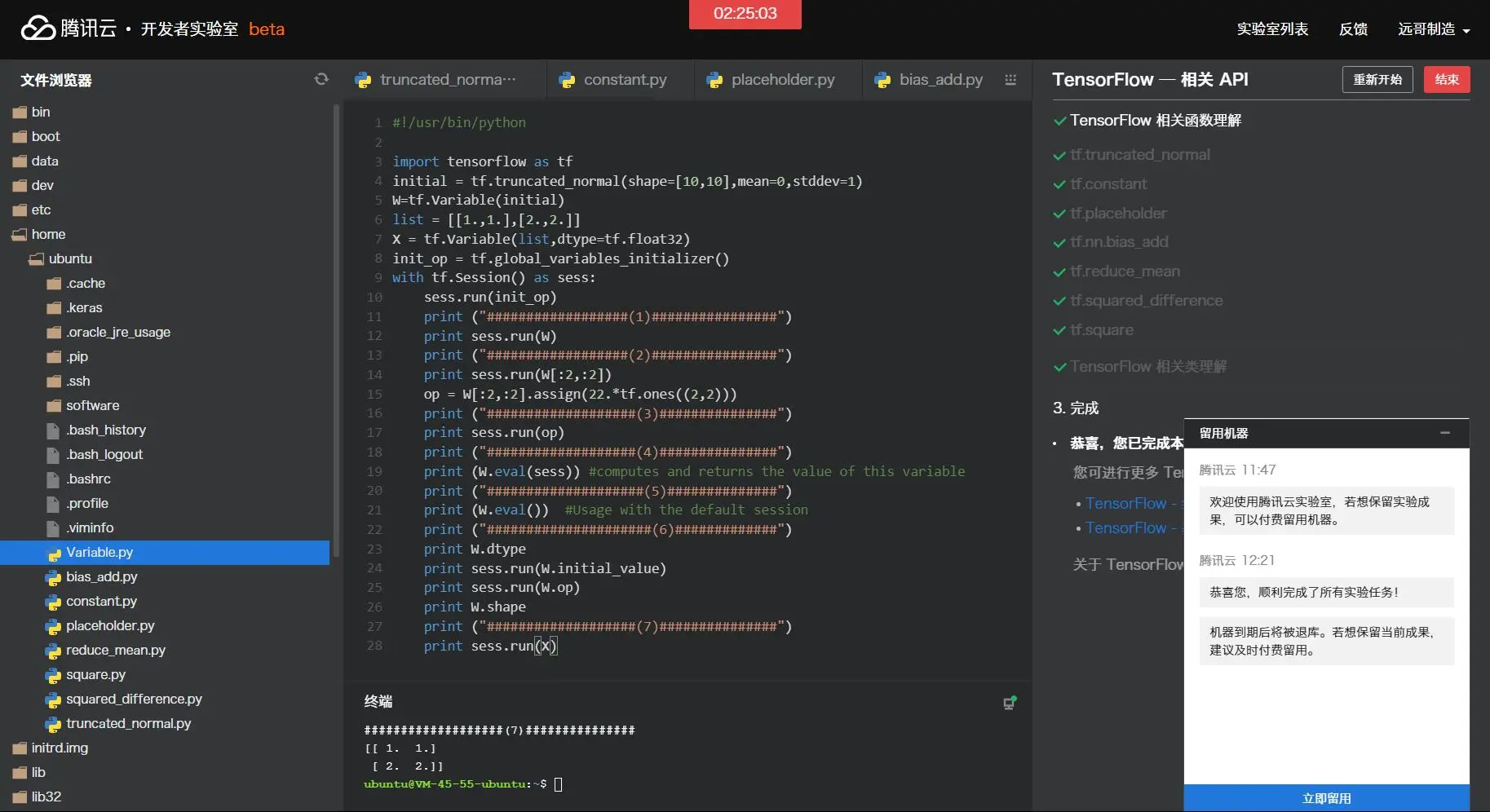

2.0 Understanding TensorFlow classes

2.1 tf.Variable

```python
__init__(
    initial_value=None,
    trainable=True,
    collections=None,
    validate_shape=True,
    caching_device=None,
    name=None,
    variable_def=None,
    dtype=None,
    expected_shape=None,
    import_scope=None
)
```

Description: maintains state across executions of the graph, for example the changing weights of a neural network.

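| Parameter | Type | Description |
| --- | --- | --- |
| initial_value | Tensor | Initial value of the Variable; its shape must be known unless validate_shape is set to False |
| trainable | Boolean | Whether to add the variable to the collection GraphKeys.TRAINABLE_VARIABLES (a collection is a global store unaffected by variable name scopes: save it in one place, retrieve it anywhere) |
| collections | list of graph collection keys | Collections the variable is added to; defaults to [GraphKeys.GLOBAL_VARIABLES] |
| validate_shape | Boolean | If False, the variable may be initialized with an initial_value of unknown shape; if True (the default), the shape of initial_value must be known |
| caching_device | string | Device on which the variable is cached |
| name | string | Name of the variable |
| dtype | dtype | If set, the initial value is converted to this type |
| expected_shape | TensorShape | If set, initial_value is expected to have this shape |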
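The collection mechanism in the table can be modeled with a plain dictionary: every variable goes into the global-variables collection by default, and trainable ones are additionally appended to the trainable-variables collection, so code such as an optimizer can later fetch them from one place. `ToyGraph` and `ToyVariable` are hypothetical classes that only mimic this bookkeeping; in TF 1.x the real lookup is tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES).

```python
class ToyGraph:
    """Hypothetical stand-in for a graph's collection store: key -> list."""
    GLOBAL_VARIABLES = "variables"
    TRAINABLE_VARIABLES = "trainable_variables"

    def __init__(self):
        self.collections = {}

    def add_to_collection(self, key, item):
        self.collections.setdefault(key, []).append(item)

    def get_collection(self, key):
        return list(self.collections.get(key, []))


class ToyVariable:
    """Hypothetical variable that registers itself the way the table describes."""

    def __init__(self, graph, initial_value, name, trainable=True, collections=None):
        self.name = name
        self.value = initial_value
        keys = list(collections) if collections is not None else [ToyGraph.GLOBAL_VARIABLES]
        if trainable and ToyGraph.TRAINABLE_VARIABLES not in keys:
            keys.append(ToyGraph.TRAINABLE_VARIABLES)  # trainable => also trainable collection
        for key in keys:
            graph.add_to_collection(key, self)


g = ToyGraph()
w = ToyVariable(g, [[1., 1.], [2., 2.]], name="W")
step = ToyVariable(g, 0, name="global_step", trainable=False)
print([v.name for v in g.get_collection(ToyGraph.GLOBAL_VARIABLES)])     # ['W', 'global_step']
print([v.name for v in g.get_collection(ToyGraph.TRAINABLE_VARIABLES)])  # ['W']
```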

Now create the source file Variable.py in /home/ubuntu, for example:

```python
import tensorflow as tf

initial = tf.truncated_normal(shape=[10, 10], mean=0, stddev=1)
W = tf.Variable(initial)
values = [[1., 1.], [2., 2.]]  # renamed from `list` to avoid shadowing the built-in
X = tf.Variable(values, dtype=tf.float32)
init_op = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init_op)
    print("##################(1)################")
    print(sess.run(W))                # the full 10x10 matrix
    print("##################(2)################")
    print(sess.run(W[:2, :2]))        # top-left 2x2 slice
    op = W[:2, :2].assign(22. * tf.ones((2, 2)))
    print("###################(3)###############")
    print(sess.run(op))               # running the assign op updates W
    print("###################(4)###############")
    print(W.eval(sess))               # eval with an explicit session
    print("####################(5)##############")
    print(W.eval())                   # eval with the default session (inside `with`)
    print("#####################(6)#############")
    print(W.dtype)
    print(sess.run(W.initial_value))  # re-runs truncated_normal: a fresh sample
    print(sess.run(W.op))             # running an Operation returns None
    print(W.shape)
    print("###################(7)###############")
    print(sess.run(X))
```

Then run: python /home/ubuntu/Variable.py

```
ubuntu@VM-45-55-ubuntu:~$ python /home/ubuntu/Variable.py
##################(1)################
[[-0.05158469 0.42488426 -1.06051874 0.05041981 -0.59257025 0.75912011 0.13238901 1.4264127 0.3660301 -0.34660342]
 [-0.58076793 -0.34156471 1.80603182 -0.63527924 -1.37761962 0.23985045 -0.9572925 0.5855329 -1.52534127 0.66485882]
 [ 0.95287526 -0.52085191 -0.6662432 0.92799437 -0.14051931 0.77191192 -0.40517998 1.15190434 -0.67737275 -0.49324712]
 [ 0.13710392 -0.26966634 -0.31862086 0.62378079 0.99250805 1.79186082 0.24381292 -0.65113115 -0.31242973 0.96655703]
 [ 1.51818967 1.4847064 -1.04498291 -1.19972205 1.12664723 0.45897952 1.30146337 -0.07071129 1.28198421 -0.07462779]
 [ 0.06365386 -1.37174654 -0.45393857 0.44872424 0.30701965 -0.33525467 1.23019528 0.2688064 -0.77721894 1.15218246]
 [ 0.5284161 -0.57362115 -1.31496811 0.557841 1.38116109 1.11097515 1.79387271 1.03924 -0.43662316 1.2135427 ]
 [ 0.12842607 0.55358696 0.50601929 0.15238616 0.30852544 -0.07885797 -0.18290153 -0.65053511 0.06731477 -1.81053722]
 [ 0.0353244 -0.61836213 -0.02346812 0.73654675 1.96743298 -1.1408062 1.58433104 -0.50077403 -1.70408487 -0.78402525]
 [-0.3279908 0.34578505 -0.4665527 0.71424776 0.48050362 -0.6924966 0.05213421 -0.02890863 1.6275624 -1.1187917 ]]
##################(2)################
[[-0.05158469 0.42488426]
 [-0.58076793 -0.34156471]]
###################(3)###############
[[ 22. 22. -1.06051874 0.05041981 -0.59257025 0.75912011 0.13238901 1.4264127 0.3660301 -0.34660342]
 [ 22. 22. 1.80603182 -0.63527924 -1.37761962 0.23985045 -0.9572925 0.5855329 -1.52534127 0.66485882]
 [ 0.95287526 -0.52085191 -0.6662432 0.92799437 -0.14051931 0.77191192 -0.40517998 1.15190434 -0.67737275 -0.49324712]
 [ 0.13710392 -0.26966634 -0.31862086 0.62378079 0.99250805 1.79186082 0.24381292 -0.65113115 -0.31242973 0.96655703]
 [ 1.51818967 1.4847064 -1.04498291 -1.19972205 1.12664723 0.45897952 1.30146337 -0.07071129 1.28198421 -0.07462779]
 [ 0.06365386 -1.37174654 -0.45393857 0.44872424 0.30701965 -0.33525467 1.23019528 0.2688064 -0.77721894 1.15218246]
 [ 0.5284161 -0.57362115 -1.31496811 0.557841 1.38116109 1.11097515 1.79387271 1.03924 -0.43662316 1.2135427 ]
 [ 0.12842607 0.55358696 0.50601929 0.15238616 0.30852544 -0.07885797 -0.18290153 -0.65053511 0.06731477 -1.81053722]
 [ 0.0353244 -0.61836213 -0.02346812 0.73654675 1.96743298 -1.1408062 1.58433104 -0.50077403 -1.70408487 -0.78402525]
 [ -0.3279908 0.34578505 -0.4665527 0.71424776 0.48050362 -0.6924966 0.05213421 -0.02890863 1.6275624 -1.1187917 ]]
###################(4)###############
[[ 22. 22. -1.06051874 0.05041981 -0.59257025 0.75912011 0.13238901 1.4264127 0.3660301 -0.34660342]
 [ 22. 22. 1.80603182 -0.63527924 -1.37761962 0.23985045 -0.9572925 0.5855329 -1.52534127 0.66485882]
 [ 0.95287526 -0.52085191 -0.6662432 0.92799437 -0.14051931 0.77191192 -0.40517998 1.15190434 -0.67737275 -0.49324712]
 [ 0.13710392 -0.26966634 -0.31862086 0.62378079 0.99250805 1.79186082 0.24381292 -0.65113115 -0.31242973 0.96655703]
 [ 1.51818967 1.4847064 -1.04498291 -1.19972205 1.12664723 0.45897952 1.30146337 -0.07071129 1.28198421 -0.07462779]
 [ 0.06365386 -1.37174654 -0.45393857 0.44872424 0.30701965 -0.33525467 1.23019528 0.2688064 -0.77721894 1.15218246]
 [ 0.5284161 -0.57362115 -1.31496811 0.557841 1.38116109 1.11097515 1.79387271 1.03924 -0.43662316 1.2135427 ]
 [ 0.12842607 0.55358696 0.50601929 0.15238616 0.30852544 -0.07885797 -0.18290153 -0.65053511 0.06731477 -1.81053722]
 [ 0.0353244 -0.61836213 -0.02346812 0.73654675 1.96743298 -1.1408062 1.58433104 -0.50077403 -1.70408487 -0.78402525]
 [ -0.3279908 0.34578505 -0.4665527 0.71424776 0.48050362 -0.6924966 0.05213421 -0.02890863 1.6275624 -1.1187917 ]]
####################(5)##############
[[ 22. 22. -1.06051874 0.05041981 -0.59257025 0.75912011 0.13238901 1.4264127 0.3660301 -0.34660342]
 [ 22. 22. 1.80603182 -0.63527924 -1.37761962 0.23985045 -0.9572925 0.5855329 -1.52534127 0.66485882]
 [ 0.95287526 -0.52085191 -0.6662432 0.92799437 -0.14051931 0.77191192 -0.40517998 1.15190434 -0.67737275 -0.49324712]
 [ 0.13710392 -0.26966634 -0.31862086 0.62378079 0.99250805 1.79186082 0.24381292 -0.65113115 -0.31242973 0.96655703]
 [ 1.51818967 1.4847064 -1.04498291 -1.19972205 1.12664723 0.45897952 1.30146337 -0.07071129 1.28198421 -0.07462779]
 [ 0.06365386 -1.37174654 -0.45393857 0.44872424 0.30701965 -0.33525467 1.23019528 0.2688064 -0.77721894 1.15218246]
 [ 0.5284161 -0.57362115 -1.31496811 0.557841 1.38116109 1.11097515 1.79387271 1.03924 -0.43662316 1.2135427 ]
 [ 0.12842607 0.55358696 0.50601929 0.15238616 0.30852544 -0.07885797 -0.18290153 -0.65053511 0.06731477 -1.81053722]
 [ 0.0353244 -0.61836213 -0.02346812 0.73654675 1.96743298 -1.1408062 1.58433104 -0.50077403 -1.70408487 -0.78402525]
 [ -0.3279908 0.34578505 -0.4665527 0.71424776 0.48050362 -0.6924966 0.05213421 -0.02890863 1.6275624 -1.1187917 ]]
#####################(6)#############
<dtype: 'float32_ref'>
[[ 0.35549659 -0.92845166 0.7202518 -1.08173835 -0.56052214 -1.79995739 -1.23022497 1.78744531 0.26768067 1.44654143]
 [ 1.00125992 0.88891822 -0.83442372 -0.51755071 0.93480241 -0.62580359 -0.42888054 0.60265911 0.23383677 0.25027233]
 [ 0.62767732 1.49130106 0.11455932 0.8136881 0.1653619 -0.03023815 -0.81600904 0.21061133 0.77372617 -1.05311072]
 [ 0.37356022 0.80606896 -0.77602631 1.7510792 1.17032671 -1.59365809 0.81380212 -0.80985826 -0.5826512 -0.68983918]
 [ 1.5539794 -0.82919389 -0.37634259 -0.04195082 0.00483348 -1.6610924 1.61947238 0.44739676 0.96909785 0.30437273]
 [-1.67946744 0.13453422 1.16949022 -1.07361639 0.16278958 0.48993936 0.79800332 -0.59556031 1.02015698 0.61534965]
 [ 1.91761112 0.57116741 -1.32458746 -0.83711451 -0.23092926 0.09989663 -0.13043015 0.39024881 -0.39114812 -1.34013951]
 [ 0.42324749 1.76086545 -1.64871371 -0.25146225 0.56552815 -0.22099398 0.3763651 -0.26513788 0.09395658 -0.51482815]
 [-1.58338928 0.34144643 -0.60781646 -0.3217389 -0.36381459 -0.09845187 -0.86982977 0.56992447 0.35818082 -1.13524997]
 [-1.17181849 0.15299995 -0.94315332 0.3065263 -0.33332458 1.59554768 0.27707765 0.4924351 1.13253677 -0.55417466]]
None
(10, 10)
###################(7)###############
[[ 1. 1.]
 [ 2. 2.]]
```

Note that the matrix printed in (6) differs from W: W.initial_value is the truncated_normal tensor itself, so running it draws a fresh sample. sess.run(W.op) prints None because running an operation, rather than a tensor, returns no value.

0x02. Afterword

Emmm… it felt slow at the beginning, and then, before I knew it, it was over…

To be continued…